Apache Kafka Deep Dive: Broker Internals and Best Practices

Introduction

Apache Kafka was developed at LinkedIn in 2010 by engineers Jay Kreps, Neha Narkhede, and Jun Rao to address the company’s need for a scalable, low-latency system capable of handling vast amounts of real-time data. At the time, LinkedIn’s existing infrastructure struggled to efficiently process the growing volume of user activity events and operational metrics generated across its platform. The team aimed to create a system that could ingest and distribute this data in real time, supporting both immediate processing and long-term storage for analytics.

The result was Kafka, a distributed publish-subscribe messaging system designed around a commit log architecture. This design choice enabled Kafka to offer high throughput, fault tolerance, and scalability, making it well-suited for real-time data pipelines. Kafka was open-sourced in early 2011 and entered the Apache Incubator later that year, achieving top-level project status in 2012.

Recognizing Kafka’s potential beyond LinkedIn, its creators founded Confluent in 2014, a company dedicated to providing enterprise-grade solutions and support for Kafka deployments. Today, Apache Kafka is widely adopted across various industries for building real-time data pipelines and streaming applications.

Apache Kafka’s journey from a simple log aggregation tool to a core component of modern event-driven architectures is rooted in both its technical design and the evolution of data-driven application needs.

Core Concepts: Understanding Kafka’s Building Blocks

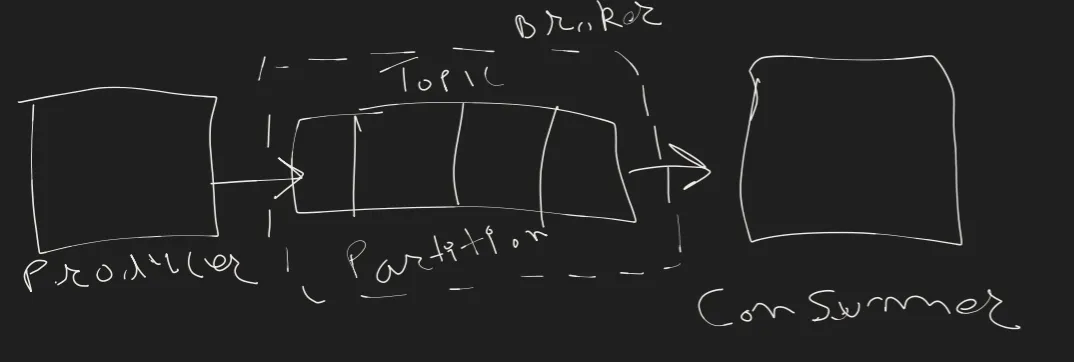

Producers and Consumers

- Producers are applications that publish data records to Kafka topics.

- Consumers subscribe to Kafka topics and process incoming messages.

Topics

A Kafka topic acts as a logical channel to which producers publish messages. Consumers subscribe to these topics to receive messages. Topics help organize data streams logically.

Partitions

Partitions are subdivisions within a topic, enabling parallelism and scaling. Kafka stores messages in these partitions, allowing multiple consumers to read data simultaneously, enhancing throughput.

Brokers

Kafka brokers are servers responsible for storing and managing data within partitions. They facilitate message reads and writes, handle replication, and ensure fault tolerance.

Internal Architecture: How Kafka Works Under the Hood

Message

A message (or record) consists of:

- Key (optional, helps with partitioning)

- Value (payload)

- Headers (optional metadata)

The producer decides which partition to send to:

- If a key is provided: hash(key) % number_of_partitions

- Otherwise, the producer spreads records across partitions (round-robin in older clients, sticky batching since Kafka 2.4) or applies custom logic.
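The selection rule above can be sketched in a few lines. This is a minimal illustration, not the client's actual partitioner: `choose_partition` is a hypothetical helper, and `zlib.crc32` stands in for the murmur2 hash that Kafka's Java client applies to the key bytes.

```python
import itertools
import zlib
from typing import Optional

# Shared counter used only for records that carry no key.
_round_robin = itertools.count()


def choose_partition(key: Optional[bytes], num_partitions: int) -> int:
    """Pick a partition: hash the key if present, otherwise rotate."""
    if key is not None:
        # Stand-in hash (assumption): Kafka's Java client uses murmur2.
        return zlib.crc32(key) % num_partitions
    # No key: simple round-robin across all partitions.
    return next(_round_robin) % num_partitions
```

Because the hash is deterministic, the same key always maps to the same partition as long as the partition count does not change.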

Examples

Message (Record)

- Definition: The unit of data you send to Kafka.

- Example: An “OrderCreated” event when someone places an order.

Topic

- Definition: A named feed/category where related messages live.

- Example: The orders topic holds all order-related events.

Key (optional)

- Definition: A value Kafka uses (by default) to choose the partition.

- Example: Key = "order-1001". This ensures all events for order 1001 go to the same partition (so they stay in order).

Value (Payload)

- Definition: The actual data you care about.

- Example: { "orderId": 1001, "customerId": "C123", "items": [ { "sku": "ABC", "qty": 2 }, { "sku": "XYZ", "qty": 1 } ] }

Headers (optional metadata)

- Definition: Extra bits of info about the message—Kafka doesn’t inspect them.

- Example: event-source: mobile-app, schema-version: v2, trace-id: 9f1b2c3d
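Put together, the pieces of the example record could be modeled as below. The `Record` class is a hypothetical illustration rather than a type from any Kafka client library; it only shows how key, value, and headers travel as bytes alongside each other.

```python
import json
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass(frozen=True)
class Record:
    """Illustrative shape of a Kafka message (not a client-library class)."""
    value: bytes                                             # payload
    key: Optional[bytes] = None                              # optional, drives partitioning
    headers: Dict[str, bytes] = field(default_factory=dict)  # optional metadata


order_created = Record(
    key=b"order-1001",
    value=json.dumps({
        "orderId": 1001,
        "customerId": "C123",
        "items": [{"sku": "ABC", "qty": 2}, {"sku": "XYZ", "qty": 1}],
    }).encode("utf-8"),
    headers={
        "event-source": b"mobile-app",
        "schema-version": b"v2",
        "trace-id": b"9f1b2c3d",
    },
)
```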

Message Flow: From Producer to Broker

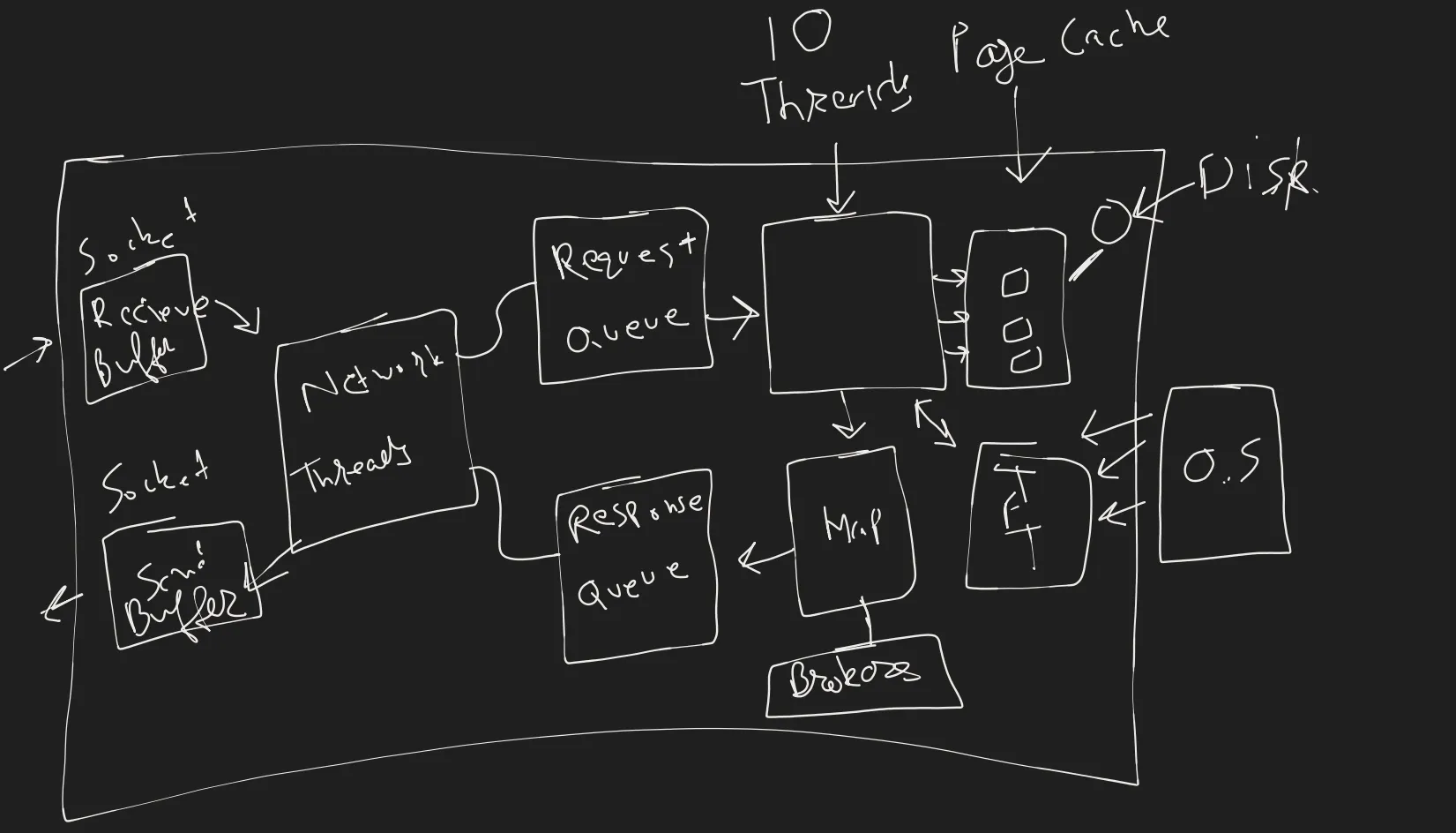

Socket Receive and Network Threads

When a producer sends a message, it travels over the network and lands in the broker’s socket receive buffer. Kafka assigns each client connection to one network thread, which handles the socket I/O for that connection throughout its lifecycle, ensuring ordered processing of requests. The non-blocking I/O design lets the broker multiplex many client connections across a small number of network threads, keeping each thread’s work light: reading request bytes, forming internal request objects, and enqueuing them for processing.

Request Queue and I/O Threads

Once the network thread enqueues a request, a pool of I/O threads picks up pending requests from a shared request queue. An I/O thread validates the CRC checksum of the payload to ensure data integrity and then appends the record to the partition’s commit log for storage and replication.
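The hand-off between network threads and I/O threads can be sketched with a shared queue. This is a toy model under stated assumptions: the function names are hypothetical, a Python list stands in for the commit log, and `zlib.crc32` stands in for the checksum Kafka computes over record batches.

```python
import queue
import threading
import zlib
from typing import List, Tuple


def network_thread(raw_requests, request_queue) -> None:
    """Form request objects from raw bytes and enqueue them."""
    for payload, crc in raw_requests:
        request_queue.put({"payload": payload, "crc": crc})


def io_thread(request_queue, commit_log, lock) -> None:
    """Validate each request's checksum, then append it to the log."""
    while True:
        request = request_queue.get()
        if request is None:  # sentinel: no more work
            return
        if zlib.crc32(request["payload"]) == request["crc"]:
            with lock:
                commit_log.append(request["payload"])
        # A failed checksum means the payload is dropped in this sketch.


def run_pipeline(raw_requests: List[Tuple[bytes, int]],
                 num_io_threads: int = 2) -> List[bytes]:
    request_queue: queue.Queue = queue.Queue()
    commit_log: List[bytes] = []
    lock = threading.Lock()
    workers = [
        threading.Thread(target=io_thread, args=(request_queue, commit_log, lock))
        for _ in range(num_io_threads)
    ]
    for worker in workers:
        worker.start()
    network_thread(raw_requests, request_queue)
    for _ in workers:
        request_queue.put(None)  # one sentinel per I/O thread
    for worker in workers:
        worker.join()
    return commit_log
```

The point of the split is that the thread reading sockets never touches the log, so slow disk work does not stall network reads. Unlike a real broker, this sketch makes no ordering promise across the worker threads.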

Commit Log and Segments

Kafka’s core storage mechanism is the commit log, chosen for its simplicity, durability, and performance:

- Append-only design: Messages are never modified or deleted in place; new records are simply appended. This yields sequential disk writes, which are orders of magnitude faster and more efficient than random writes.

- Crash recovery: Because data is written as a linear sequence, recovering from broker crashes is straightforward—Kafka can replay the log to rebuild in-memory state reliably.

- Replayability: Consumers can rewind or reprocess messages by manipulating offsets, enabling event replay for auditing, debugging, or analytics.

Each log is organized on disk into sequential segments:

- Data File: An append-only sequence of messages in write order.

- Index File: A compact lookup structure mapping message offsets to their file byte positions, enabling quick random access by offset.
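A segment and its index can be approximated in memory as follows. This is a simplified sketch: `LogSegment` is a hypothetical class, a `bytearray` stands in for the data file, and the index records every second message, whereas Kafka spaces index entries by bytes written.

```python
import bisect
from typing import List, Tuple


class LogSegment:
    """Append-only data plus a sparse offset -> byte-position index."""

    def __init__(self, base_offset: int = 0, index_interval: int = 2) -> None:
        self.base_offset = base_offset
        self.index_interval = index_interval    # assumption: index every N messages
        self.data = bytearray()                 # stand-in for the data file
        self.index: List[Tuple[int, int]] = []  # (offset, byte position)
        self.next_offset = base_offset

    def append(self, message: bytes) -> int:
        offset = self.next_offset
        if (offset - self.base_offset) % self.index_interval == 0:
            self.index.append((offset, len(self.data)))
        # Frame each message as a 4-byte length prefix plus payload.
        self.data += len(message).to_bytes(4, "big") + message
        self.next_offset += 1
        return offset

    def read(self, offset: int) -> bytes:
        if not self.base_offset <= offset < self.next_offset:
            raise KeyError(offset)
        # Find the nearest indexed entry at or below the requested offset.
        i = bisect.bisect_right(self.index, (offset, float("inf"))) - 1
        current, pos = self.index[i]
        # Scan forward from that position; at most index_interval - 1 hops.
        while True:
            size = int.from_bytes(self.data[pos:pos + 4], "big")
            if current == offset:
                return bytes(self.data[pos + 4:pos + 4 + size])
            pos += 4 + size
            current += 1
```

Reading never modifies the segment, which is why any number of consumers can replay from any retained offset.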

Replication and Acknowledgment

When the producer requests acks=all (the client default since Kafka 3.0), the broker acknowledges a write only after all in-sync replicas have the message. While replication is in progress, the I/O thread parks the request in an in-memory map of pending acknowledgments (commonly called “purgatory”), which frees the thread for other work.

Once replication completes, the broker generates a produce response and enqueues it in the response queue. A network thread then picks up the response, writes it to the socket send buffer, and sends it back to the producer, completing the request–response cycle.
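The purgatory idea can be modeled as a map of parked requests that complete when every in-sync replica has caught up. This is a simplified sketch: `ProducePurgatory` and its methods are hypothetical names, and the leader is treated as just another member of the replica set.

```python
from typing import Callable, Dict, Set


class ProducePurgatory:
    """Parks produce requests until all in-sync replicas have the record."""

    def __init__(self, in_sync_replicas: Set[str]) -> None:
        self.in_sync_replicas = set(in_sync_replicas)
        self.pending: Dict[int, dict] = {}  # offset -> parked request state

    def park(self, offset: int, on_complete: Callable[[int], None]) -> None:
        """Called by the I/O thread, which then returns to other work."""
        self.pending[offset] = {"acked": set(), "on_complete": on_complete}

    def replica_acked(self, replica: str, up_to_offset: int) -> None:
        """A replica reports it has replicated everything up to this offset."""
        for offset in sorted(self.pending):  # sorted() copies, so deleting is safe
            if offset > up_to_offset:
                break
            entry = self.pending[offset]
            entry["acked"].add(replica)
            if entry["acked"] >= self.in_sync_replicas:
                del self.pending[offset]
                entry["on_complete"](offset)  # e.g. enqueue the produce response
```

For example, with replicas {"b1", "b2"}, a request parked at offset 7 completes only after both have reported offset 7 or later.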

Ordered Processing

Kafka enforces strict ordering on a per-connection basis: a network thread processes only one request per client at a time and does not read the next request until it has successfully sent the response for the previous one. This mechanism guarantees that each producer’s messages are processed in the exact sequence they were sent.

Consumer Flow: From Fetch Request to Zero-Copy Response

Fetch Request: Topic, Partition & Offset

The consumer issues a fetch request specifying:

- Topic name (e.g., orders)

- Partition number (e.g., 2)

- Offset (e.g., 150)

Socket Receive & Network Threads

The OS kernel places the incoming TCP packet into the broker’s socket receive buffer. The network thread that owns the connection reads one request at a time per client, preserving per-client ordering, and enqueues it into Kafka’s internal request queue.

I/O Threads & Index Lookup

An I/O thread pulls the fetch request from the queue. It uses the partition’s index file to map the requested offset (150) to a byte position in the log segment, skipping unnecessary file scans.

Long-Polling & Purgatory

If no new data exists at that offset, Kafka adds the request to a purgatory map, waiting for either:

- A minimum bytes threshold (e.g., 1 KB of new data), or

- A maximum wait time (e.g., 500 ms).

Once either condition is met, the request is resumed and a response generated.
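The wake-up rule for a parked fetch reduces to a single predicate. In this sketch `fetch_ready` is a hypothetical helper; its two thresholds correspond to the consumer settings fetch.min.bytes and fetch.max.wait.ms.

```python
def fetch_ready(available_bytes: int, min_bytes: int,
                waited_ms: int, max_wait_ms: int) -> bool:
    """A parked fetch completes when enough data exists or time runs out."""
    return available_bytes >= min_bytes or waited_ms >= max_wait_ms
```

With the values used above (1 KB, 500 ms), a fetch that has waited 120 ms with 300 bytes available stays parked, while the same fetch at 500 ms returns whatever has arrived.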

Response Generation & Network Thread

The processed data is moved into a response object and queued for the network thread. The network thread then writes the response into the socket send buffer and returns it to the consumer.

Zero-Copy Transfer

Kafka leverages the OS sendfile syscall to copy data directly from the page cache (or log file) to the network socket, bypassing user-space copies:

- Page Cache Hits are extremely fast (in-memory reads).

- Cold Reads from disk may block the network thread briefly, impacting other clients on that thread.
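The same mechanism is reachable from Python through `socket.sendfile`, which uses the OS `sendfile` call where available and falls back to ordinary sends otherwise. The sketch below is only an analogy for the broker's transfer path: the function names, the temporary file standing in for a log segment, and the byte positions are all made up for illustration.

```python
import os
import socket
import tempfile


def send_segment_slice(sock: socket.socket, path: str,
                       position: int, length: int) -> int:
    """Send `length` bytes of a file, starting at `position`, to a socket."""
    with open(path, "rb") as segment:
        # No read() into a Python buffer: the kernel moves the bytes.
        return sock.sendfile(segment, offset=position, count=length)


def demo() -> bytes:
    """Write a fake segment, send one slice of it, return what was received."""
    with tempfile.NamedTemporaryFile(delete=False) as segment:
        segment.write(b"HEADERpayload-bytesTRAILER")
        path = segment.name
    broker_side, consumer_side = socket.socketpair()
    try:
        send_segment_slice(broker_side, path, position=6, length=13)
        broker_side.close()  # signals end of stream to the reader
        chunks = []
        while True:
            chunk = consumer_side.recv(64)
            if not chunk:
                break
            chunks.append(chunk)
        return b"".join(chunks)
    finally:
        broker_side.close()
        consumer_side.close()
        os.unlink(path)
```

The byte position and length passed to `send_segment_slice` play the role of the values the index lookup produces for a fetch request.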

Tiered Storage

To prevent cold-data reads from degrading performance, Kafka can offload older log segments to a cheaper, external tier (e.g., object storage). Hot segments remain on local SSD for ultra-fast access, while cold segments are fetched on-demand without evicting critical pages or blocking main I/O threads.

Why Kafka’s Architecture Sets It Apart

- Append-Only Log: Sequential writes make disk I/O ultra-fast and allow any reader to replay history independently.

- Partitions: Like adding checkout lanes, more partitions serve more traffic in parallel while each partition still delivers its records in order.

- Leader-Follower Replication: One replica (the leader) accepts writes and the followers copy them. If the leader fails, an in-sync follower takes over.

- Consumer Offsets: Each consumer group keeps its own bookmark (offset), so groups read the same data independently without disturbing one another.

- Retention Policies: Old segments are deleted or compacted once they exceed the configured time or size limit, so disk space is reclaimed predictably while new data keeps arriving.

- Exactly-Once Semantics: Idempotent producers and transactions let each record take effect once within Kafka, with no duplicates and no losses.

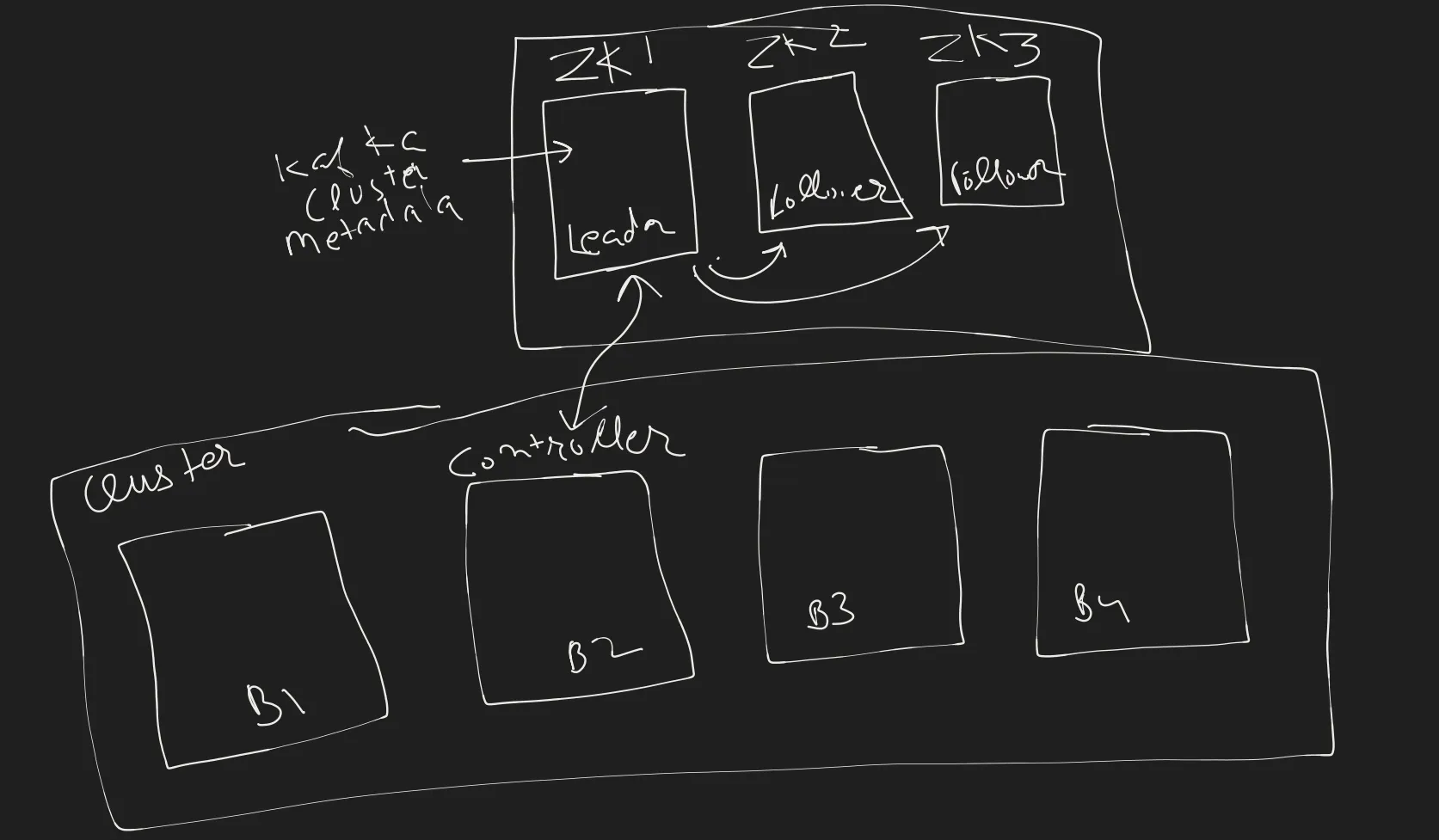

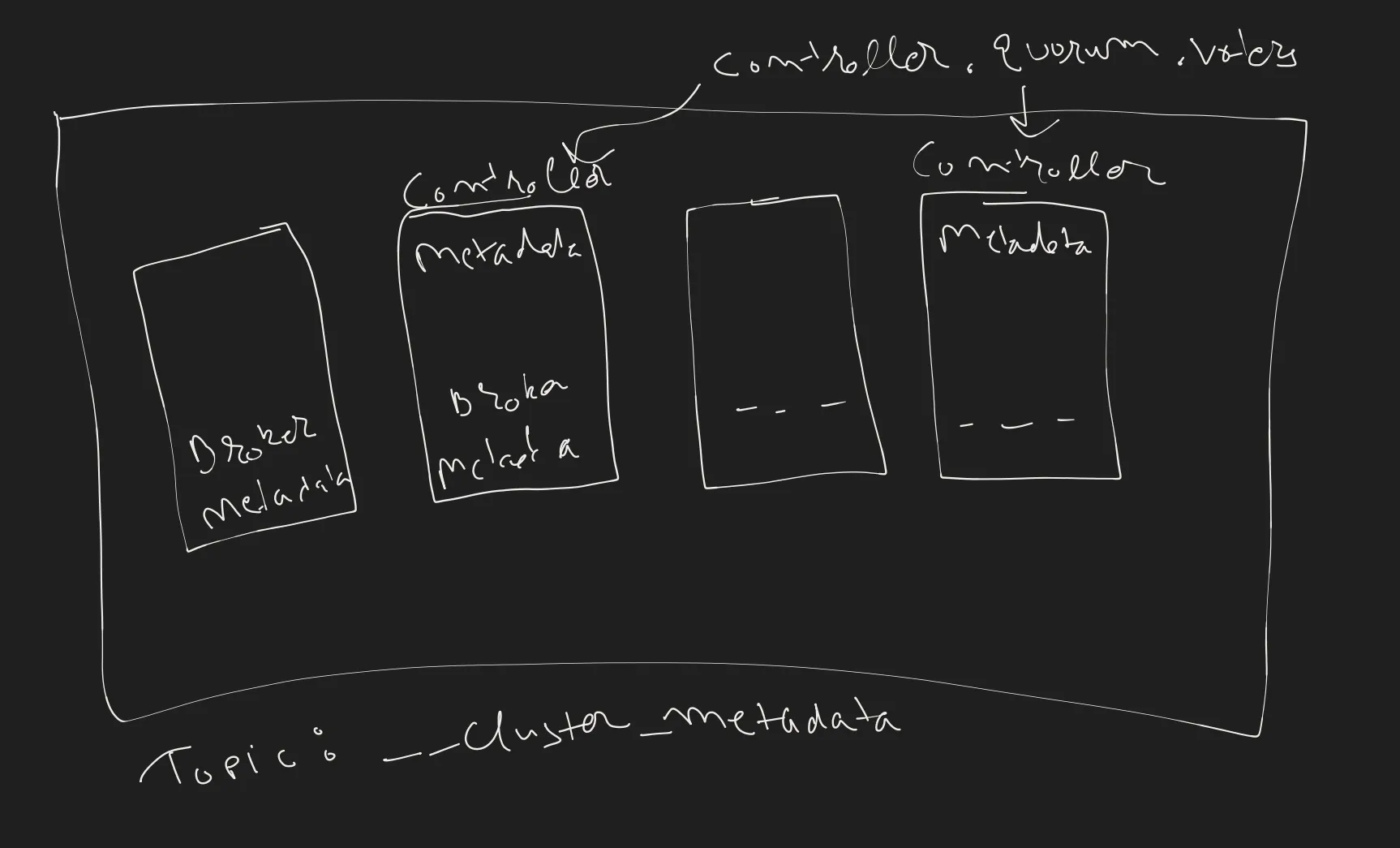

Cluster Metadata Management: ZooKeeper vs. KRaft

In earlier Kafka versions, cluster metadata (broker lists, topic configurations, partition assignments, and leader elections) was managed by an external Apache ZooKeeper ensemble. While powerful, this introduced operational overhead:

- A separate distributed system to maintain and monitor.

- Version compatibility and configuration mismatches.

- Slower cluster startup and metadata propagation.

KRaft (Kafka Raft) mode, production-ready since Kafka 3.3, moves metadata management into Kafka itself, where a quorum of controllers replicates it using the Raft consensus algorithm:

- Fewer Moving Parts → Simpler Operations

- Improved Scalability & Faster Recovery

- Efficient Metadata Propagation

Important Design Choices and Tradeoffs

Partitioning Strategy

- Random Partitioning: When ordering across messages doesn’t matter.

- Keyed (Hash-Based) Partitioning: When you must preserve order per business key.

- Manual Partitioning: When you need to shard known hot keys or enforce tenant isolation.
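Manual partitioning for tenant isolation might look like the sketch below. Everything here is hypothetical: the pinning table, the tenant names, and the convention that pinned tenants occupy the lowest partition numbers. `zlib.crc32` again stands in for the client's real hash.

```python
import zlib
from typing import Dict

# Hypothetical pinning table: tenant id -> dedicated partition.
# Assumption: pinned partitions are numbered 0..len(PINNED_TENANTS)-1.
PINNED_TENANTS: Dict[str, int] = {"tenant-big": 0}


def partition_for(tenant_id: str, num_partitions: int) -> int:
    """Pinned tenants get their own partition; the rest share the others."""
    if tenant_id in PINNED_TENANTS:
        return PINNED_TENANTS[tenant_id]
    shared = num_partitions - len(PINNED_TENANTS)
    if shared <= 0:
        raise ValueError("no partitions left for unpinned tenants")
    # Hash into the shared range, which starts after the pinned partitions.
    return len(PINNED_TENANTS) + zlib.crc32(tenant_id.encode("utf-8")) % shared
```

The trade-off is that the pinning table becomes configuration you have to maintain, so it is usually reserved for a handful of known heavy tenants or hot keys.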

Replication Factor

- RF = 1 for non-critical or dev data (e.g., staging debug logs).

- RF = 2 for production dashboards where some data loss is tolerable.

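- RF = 3+ for mission-critical streams (e.g., payments). With RF = 3, data survives two broker failures. With min.insync.replicas=2, writes sent with acks=all keep succeeding through one failure.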
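The arithmetic behind these choices is short enough to write down. `tolerated_failures` is a hypothetical helper, and it assumes the surviving replicas were in sync when the others failed.

```python
def tolerated_failures(replication_factor: int,
                       min_insync_replicas: int = 1) -> dict:
    """How many broker losses a partition can absorb, by two measures."""
    if not 1 <= min_insync_replicas <= replication_factor:
        raise ValueError("need 1 <= min.insync.replicas <= replication factor")
    return {
        # At least one copy of the data still exists.
        "data_survives": replication_factor - 1,
        # Producers using acks=all can still write.
        "acks_all_writes_continue": replication_factor - min_insync_replicas,
    }
```

For RF = 3 with min.insync.replicas=2 this gives two failures before the data is gone, but only one before acks=all producers start seeing errors.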

Throughput vs. Latency Tuning

Producer settings for compression, batching, and acknowledgments can be tuned to favor either throughput or latency.

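- High Throughput: compression.type=snappy, large batch.size, acks=1.

- Low Latency: compression.type=none, linger.ms=0, small batches, acks=1 (acks=all adds a replication round trip, so keep it only when durability matters more than latency).

- Balanced: Moderate compression, linger.ms≈5, acks=all.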
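As a starting point, the three profiles could be captured as producer settings like these. The property names are real producer configs, but the specific numbers, the choice of lz4 for "moderate" compression, and the helper `build_producer_config` are illustrative assumptions. `acks` is passed separately because it trades durability against latency independently of batching.

```python
# Batching and compression per profile (values are illustrative assumptions).
BATCHING_PROFILES = {
    "high_throughput": {"compression.type": "snappy", "batch.size": 262144, "linger.ms": 20},
    "low_latency": {"compression.type": "none", "batch.size": 16384, "linger.ms": 0},
    "balanced": {"compression.type": "lz4", "batch.size": 65536, "linger.ms": 5},
}


def build_producer_config(profile: str, acks: str) -> dict:
    """Merge a batching profile with an explicit durability choice."""
    if acks not in {"0", "1", "all"}:
        raise ValueError(f"unsupported acks value: {acks!r}")
    return {**BATCHING_PROFILES[profile], "acks": acks}
```

Measure before settling on any of these: the right batch size and linger time depend on message size and traffic shape.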

Common Mistakes and Anti-Patterns to Avoid

- Large Messages (> 1 MB): Oversized messages strain broker memory and network buffers and reduce throughput. The broker’s default message.max.bytes limit is roughly 1 MB.

- Hot Partitions: Uneven key distribution can overload individual partitions, causing bottlenecks.

- Poor Retention Configurations: Mismanaged retention policies can cause unexpected data loss or excessive storage consumption.
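Hot partitions are easy to check for before they hurt. The sketch below estimates skew from a sample of keys. `partition_skew` is a hypothetical helper and `zlib.crc32` is a stand-in for the client's hash, so the exact numbers will differ from a real cluster even though the shape of the problem shows up the same way.

```python
import zlib
from collections import Counter
from typing import Iterable


def partition_skew(keys: Iterable[str], num_partitions: int) -> float:
    """Busiest partition's load divided by a perfectly even share.

    1.0 means an even spread; num_partitions means everything
    landed on a single partition.
    """
    counts = Counter(
        zlib.crc32(key.encode("utf-8")) % num_partitions for key in keys
    )
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return max(counts.values()) / (total / num_partitions)
```

Running this over a day's worth of keys from a topic gives a quick signal on whether the key choice needs rethinking.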

When Not to Use Kafka

While powerful, Kafka isn’t the solution for every scenario:

- Simple Task Queues: Kafka may be overkill for low-volume, straightforward messaging; simpler systems like RabbitMQ might suffice.

- Request/Response Patterns: Kafka is optimized for streaming rather than synchronous interactions.

- Small-Scale Applications: The overhead of Kafka setup and maintenance might outweigh the benefits for small-scale or temporary applications.

Understanding Kafka’s strengths and limitations can help build effective, scalable systems tailored to specific needs.